Failing to order diagnostic tests when indicated, or misinterpreting their results, can lead to diagnostic errors and adverse outcomes.1 Conversely, overuse of tests generates more false positive results, increases the risk of immediate harm (eg, allergic contrast reaction) and promotes overdiagnosis of benign incidental abnormalities, resulting in unnecessary disease labelling and cascades of further tests and treatments.2

Estimates of the appropriateness of diagnostic investigations vary considerably according to clinical setting, test choice and study methodology. In one meta‐analysis of primary care studies that compared test use against scenario‐specific guideline standards, echocardiography was both underused (54–89%) and overused (77–92%), pulmonary function tests were underused (38–78%) and urine cultures were overused (36–77%).3 In another meta‐analysis of hospital‐based studies, overall underuse and overuse rates of laboratory testing were 45% (95% confidence interval [CI], 34–56%) and 21% (95% CI, 16–25%), respectively.4

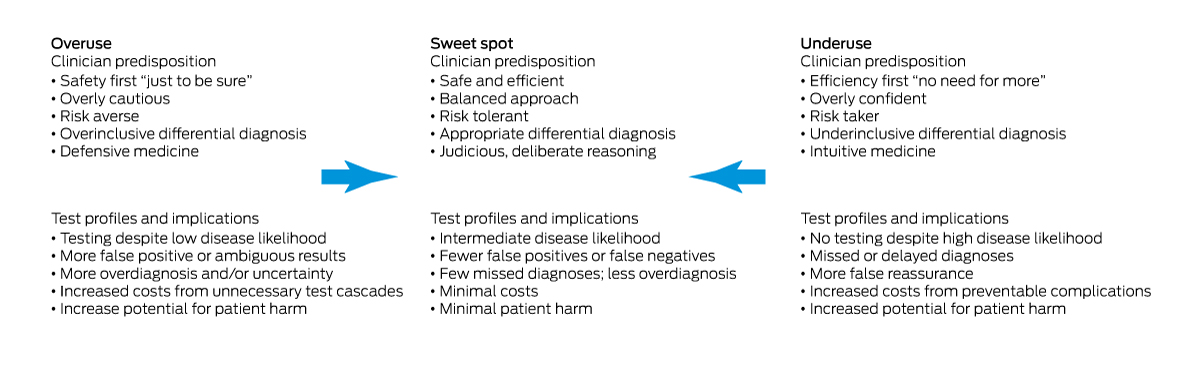

The increasing availability and use of a wider range of pathology and imaging investigations, medicolegal concerns about undertesting, few disincentives for overtesting, time pressures, patient expectations and lack of awareness of new evidence all promote inappropriate test use.5 Clinicians’ innate risk tolerance, personality traits and cognitive biases also influence test requesting behaviours.6 This perspective article describes the drivers of inappropriate use and proposes strategies for achieving the “sweet spot” in using diagnostic tests (Box 1).

Questioning the role of testing

Extensive testing is often unnecessary for making a provisional diagnosis. Attentive listening, pertinent history taking and focused physical examination are frequently more informative than multiple tests in arriving at a correct diagnosis.7 Inconclusive results of unwarranted tests may exacerbate diagnostic uncertainty,8 provoking further unnecessary testing. Giving clinicians more time and support to perform basic clinical tasks adequately is paramount.9 Re‐engineering electronic medical records and laboratory reporting systems to enable more efficient searching of past diagnosis lists and test results10 could also reduce diagnostic confusion and unnecessary testing, especially repeat requests for the same test.

The “test of time”, rather than more tests, is often useful in diagnostic decisions about non‐acute, non‐urgent, clinically undifferentiated presentations. In general practice and emergency departments, up to one‐half of symptoms defy definitive diagnosis at the first encounter.11 Between 75% and 80% of such symptoms improve over four to 12 weeks, usually regardless of medical intervention.11 Patients with “medically unexplained symptoms” should be spared exhaustive and fruitless testing for rare underlying diseases, particularly as normal results may not allay their discomfort and anxiety.12 Alternative strategies include reassuring such patients about what they do not have, controlling troublesome symptoms, observing over time and, if necessary, seeking a second opinion.

Choosing tests according to patient preferences and treatment options

Patient‐centred diagnostic testing requires asking whether the test is appropriate given, first, the evidence of its harms and benefits for the intended use in this patient and, second, how well these harm–benefit trade‐offs align with the patient’s preferences.13 Before testing, clinicians should advise patients that no test is infallible (ie, false positive and false negative results can occur), discuss the management options that would follow positive, negative or inconclusive findings, and estimate and communicate the waiting times for the test to be performed and for results to become available.

Using tests to confirm a clinically likely diagnosis is less valuable if no therapy is available for that diagnosis, if greater diagnostic certainty is not needed to determine further management, or if the patient would decline treatment for the diagnosis if present (eg, chemotherapy and surgery for an underlying cancer). However, in selected cases, tests that assess disease severity may assist prognostication.

Understanding pre‐test probability and test performance

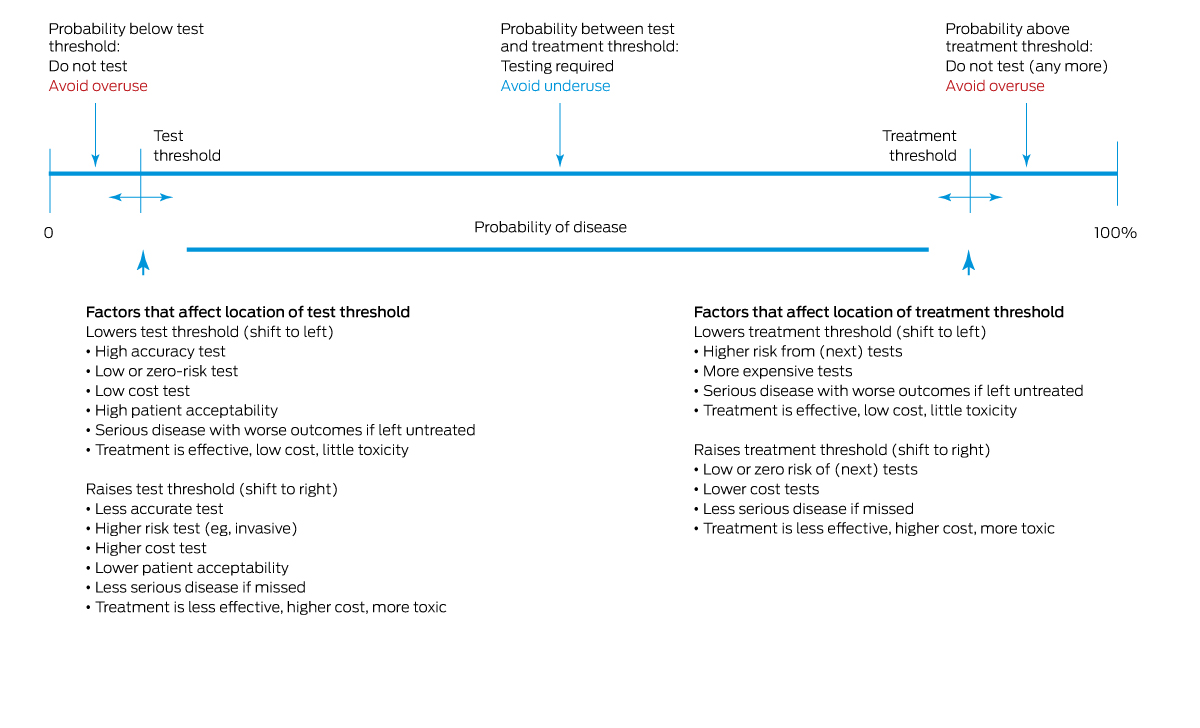

Test requests require consideration of pre‐test disease probability, test accuracy, the benefits and harms of downstream treatments, and the acuity and severity of the clinical condition. Tests may be inappropriate when disease probability is too low or too high for the result to influence decision making. In particular, testing individuals with very low disease probability will likely yield few true positive results, generate more false positives and do little to reassure patients or resolve their symptoms.14 Unfortunately, clinicians often overestimate pre‐test probability15 and, owing to a misunderstanding of test sensitivity, specificity and predictive value, do not adequately revise their estimates of post‐test disease probability downward in light of test results.16 Misjudgements are even more likely when an unexpected test result challenges the practitioner’s well‐founded clinical suspicion.17 Considering in advance how a test result will influence further management requires an understanding of where post‐test probabilities sit in relation to test and treatment decision thresholds (Box 2).18
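The threshold reasoning described above can be sketched numerically. The snippet below is a minimal illustration, not a validated clinical tool. It converts a pre‐test probability to odds, multiplies by the positive or negative likelihood ratio (Bayes’ theorem in odds form) to obtain post‐test probabilities, and then asks whether a positive and a negative result would lead to different decisions. It assumes a test threshold (below which neither testing nor treatment is warranted) and a treatment threshold (above which treating without further testing is reasonable); the thresholds, likelihood ratios and function names are hypothetical, chosen only for illustration.

```python
def post_test_probability(pre_test: float, likelihood_ratio: float) -> float:
    """Bayes' theorem in odds form: post-test odds = pre-test odds x likelihood ratio."""
    if not 0 < pre_test < 1:
        raise ValueError("pre_test must lie strictly between 0 and 1")
    pre_odds = pre_test / (1 - pre_test)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


def decision(probability: float, test_threshold: float, treatment_threshold: float) -> str:
    """Place a disease probability in one of the three zones of the threshold model."""
    if probability < test_threshold:
        return "no treatment"  # below the test threshold: neither test nor treat
    if probability >= treatment_threshold:
        return "treat"  # above the treatment threshold: treat without further testing
    return "further testing"  # between thresholds: more information could change management


def result_changes_management(pre_test, lr_positive, lr_negative,
                              test_threshold, treatment_threshold):
    """Return the decision after a positive and a negative result, and whether they differ."""
    after_positive = decision(post_test_probability(pre_test, lr_positive),
                              test_threshold, treatment_threshold)
    after_negative = decision(post_test_probability(pre_test, lr_negative),
                              test_threshold, treatment_threshold)
    return after_positive, after_negative, after_positive != after_negative


if __name__ == "__main__":
    # Hypothetical test (LR+ 5, LR- 0.2) and hypothetical thresholds (10% and 50%)
    for pre in (0.01, 0.30, 0.90):
        print(pre, result_changes_management(pre, 5.0, 0.2, 0.10, 0.50))
```

With these illustrative numbers, a pre‐test probability of 1% or 90% leads to the same decision whichever way the result falls, so the test adds nothing to management; at 30%, a positive result moves the patient above the treatment threshold and a negative result below the test threshold.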

The most useful tests are those whose results accurately and substantially increase or decrease disease probability, that is, tests with a high positive or a high negative predictive value, respectively. These properties are not well understood: few tests are good at both, many tests make no difference to patient outcomes,19 and the evaluation and regulation of new diagnostic tests are less rigorous than those of medications or devices.20 A better understanding of disease prevalence and test performance among clinicians and patients may increase the appropriateness of test requests (Box 3).21,22 Other assistive strategies include educating clinicians in Bayesian logic and test characteristics using visual tools,23 using calculators that adjust disease probability for risk factors and test likelihood ratios,24 and applying computerised decision aids to guide probability estimates.25
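To make the dependence of predictive values on prevalence concrete, the sketch below computes the expected true and false positives, and the positive and negative predictive values (PPV and NPV), when a hypothetical test with 90% sensitivity and 90% specificity is applied to 1000 people at different disease prevalences. The test characteristics, class and function names are illustrative assumptions, not properties of any real test.

```python
from dataclasses import dataclass


@dataclass
class OutcomeCounts:
    """Expected 2x2 table counts for a cohort of tested people."""
    true_pos: float
    false_pos: float
    false_neg: float
    true_neg: float

    @property
    def ppv(self) -> float:
        # Proportion of positive results that are true positives
        return self.true_pos / (self.true_pos + self.false_pos)

    @property
    def npv(self) -> float:
        # Proportion of negative results that are true negatives
        return self.true_neg / (self.true_neg + self.false_neg)


def expected_outcomes(sensitivity: float, specificity: float,
                      prevalence: float, n: int = 1000) -> OutcomeCounts:
    """Expected counts when n people with the given disease prevalence are tested."""
    diseased = n * prevalence
    healthy = n - diseased
    return OutcomeCounts(
        true_pos=sensitivity * diseased,
        false_pos=(1 - specificity) * healthy,
        false_neg=(1 - sensitivity) * diseased,
        true_neg=specificity * healthy,
    )


if __name__ == "__main__":
    # Hypothetical test with 90% sensitivity and 90% specificity
    for prevalence in (0.01, 0.10, 0.50):
        o = expected_outcomes(0.90, 0.90, prevalence)
        print(f"prevalence {prevalence:.0%}: TP {o.true_pos:.0f}, FP {o.false_pos:.0f}, "
              f"PPV {o.ppv:.0%}, NPV {o.npv:.1%}")
```

At 1% prevalence the sketch expects about 9 true positives against 99 false positives per 1000 people tested (PPV about 8%), whereas at 50% prevalence the same test has a PPV of 90%. The test itself has not changed, only the population in which it is used.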

Avoiding misinterpretation of mildly abnormal or normal test results

Misinterpreting test results just above or below the normal reference range (NRR) as indicators of disease promotes overdiagnosis for several reasons.26 First, the quoted NRR may not apply to infants, very old patients, pregnant or post‐menopausal women, or people with certain benign genetic traits (eg, thalassaemia trait). Second, because the NRR is usually defined as the central 95% of values in a healthy reference population, about 5% of healthy people will have results just outside it. Third, the more tests requested, the greater the chance of a spuriously abnormal result in a healthy individual. Fourth, sampling or processing errors, dietary changes, certain medications, seasonal influences and physiological variability can all generate slightly abnormal but non‐pathological results.
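The third point can be quantified under two simplifying assumptions: that each test’s NRR covers the central 95% of healthy values, and that the results of different tests are statistically independent. Under these assumptions the chance that a healthy person has at least one result outside the NRR across n tests is 1 − 0.95^n. Many analytes are correlated in practice, so real‐world figures may differ; the function name below is hypothetical.

```python
def chance_of_any_abnormal(n_tests: int, coverage: float = 0.95) -> float:
    """Probability that a healthy person has at least one result outside the NRR.

    Assumes (hypothetically) that each NRR covers `coverage` of healthy values
    and that the n test results are statistically independent.
    """
    if n_tests < 0:
        raise ValueError("n_tests must be non-negative")
    return 1 - coverage ** n_tests


if __name__ == "__main__":
    for n in (1, 5, 10, 20):
        print(f"{n:>2} tests: {chance_of_any_abnormal(n):.0%}")
```

Running it prints roughly 5%, 23%, 40% and 64% for 1, 5, 10 and 20 tests, respectively.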

In the absence of risk factors, clinical features or family history, mild elevations in erythrocyte sedimentation rate, C‐reactive protein, biochemical analytes, rheumatoid factor, autoantibody titres, thyroid‐stimulating hormone and tumour markers do not necessarily indicate disease.27 Conversely, a marked change within the normal range (eg, serum creatinine rising from low to high normal, or serum ferritin falling from high to low normal) may suggest evolving disease. In all these scenarios, test results must be interpreted in light of clinical context and pre‐test probability.
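One established laboratory‐medicine approach for judging whether a change between two results is “marked”, not described in this article, is the reference change value (RCV): the smallest percentage change that exceeds what analytical imprecision (CVa) and normal within‐person biological variation (CVi) would be expected to produce. The sketch below uses the common symmetric formula RCV = √2 × Z × √(CVa² + CVi²). The CV figures and the creatinine‐like values are hypothetical; real CVs should come from the laboratory and published biological variation data, and some laboratories use asymmetric (log‐normal) RCVs, which this sketch does not implement.

```python
import math


def reference_change_value(cv_analytical: float, cv_within_subject: float,
                           z: float = 1.96) -> float:
    """Symmetric reference change value (%) = sqrt(2) * z * sqrt(CVa^2 + CVi^2).

    CVs are percentages; z = 1.96 corresponds to 95% two-sided significance.
    """
    return math.sqrt(2) * z * math.sqrt(cv_analytical ** 2 + cv_within_subject ** 2)


def change_exceeds_rcv(previous: float, current: float, cv_analytical: float,
                       cv_within_subject: float, z: float = 1.96) -> bool:
    """True if the percentage change between two results exceeds the RCV."""
    percent_change = 100 * (current - previous) / previous
    return abs(percent_change) > reference_change_value(cv_analytical, cv_within_subject, z)


if __name__ == "__main__":
    # Hypothetical CVs: 3% analytical, 5% within-subject biological variation
    print(f"RCV: {reference_change_value(3, 5):.1f}%")
    print(change_exceeds_rcv(60, 66, 3, 5))  # 10% rise: within expected variation
    print(change_exceeds_rcv(60, 95, 3, 5))  # about 58% rise: exceeds the RCV
```

With these assumed CVs the RCV is about 16%, so a rise from 60 to 66 falls within expected variation while a rise from 60 to 95 does not, even if both values lie inside the NRR.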

Using diagnostic decision support tools

Validated diagnostic rules and pathways can guide the choice and timing of test requests in specific clinical scenarios. For example, regarding underuse, combining troponin assays with clinical risk scores in patients presenting to emergency departments with chest pain assists in ruling out acute coronary syndrome and avoiding unnecessary stress testing and hospitalisation.28 Regarding overuse, using rule‐out algorithms and D‐dimer testing in suspected pulmonary thromboembolism limits unnecessary computed tomography pulmonary angiography and overinvestigation of incidental findings.29 Integrating decision rules into electronic medical records improves appropriate test ordering.30 Computerised tools can generate plausible differential diagnoses that promote more appropriate lines of investigation,31 as well as context‐aware warnings of lurking diagnostic pitfalls, including test limitations,32 in uncertain or serious clinical scenarios. However, optimal use of these tools requires easy‐to‐use interfaces, seamless integration into clinical workflows and compatibility with clinicians’ cognitive processes.
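The article does not specify which rule‐out algorithm it has in mind. Purely as an illustration of how such a pathway sequences tests, the sketch below assumes one widely used approach: the two‐tier Wells score for pulmonary embolism (PE), with D‐dimer requested only when PE is “unlikely” (score ≤ 4) and imaging reserved for a “likely” score or a positive D‐dimer. It is not a clinical tool; the class and function names are hypothetical, the D‐dimer result is treated as a simple positive/negative flag because cut‐offs are assay‐specific, and local pathways (eg, age‐adjusted D‐dimer thresholds) may differ.

```python
from dataclasses import dataclass

# Two-tier Wells criteria for pulmonary embolism (points per finding)
WELLS_POINTS = {
    "clinical_signs_of_dvt": 3.0,
    "pe_most_likely_diagnosis": 3.0,  # PE is the most likely (or equally likely) diagnosis
    "heart_rate_over_100": 1.5,
    "immobilisation_or_recent_surgery": 1.5,  # immobilised >= 3 days or surgery in past 4 weeks
    "previous_dvt_or_pe": 1.5,
    "haemoptysis": 1.0,
    "malignancy": 1.0,  # treated within 6 months or palliative
}


@dataclass
class SuspectedPE:
    clinical_signs_of_dvt: bool = False
    pe_most_likely_diagnosis: bool = False
    heart_rate_over_100: bool = False
    immobilisation_or_recent_surgery: bool = False
    previous_dvt_or_pe: bool = False
    haemoptysis: bool = False
    malignancy: bool = False


def wells_score(findings: SuspectedPE) -> float:
    """Sum the points for each finding that is present."""
    return sum(points for name, points in WELLS_POINTS.items() if getattr(findings, name))


def next_step(findings: SuspectedPE, d_dimer_positive=None) -> str:
    """Suggest the next step; d_dimer_positive is None until a D-dimer result exists."""
    if wells_score(findings) > 4:
        return "PE likely: proceed to CTPA"
    if d_dimer_positive is None:
        return "PE unlikely: request D-dimer"
    if d_dimer_positive:
        return "PE unlikely, D-dimer positive: proceed to CTPA"
    return "PE unlikely, D-dimer negative: no CTPA"
```

For example, a patient with tachycardia and haemoptysis only (score 2.5) would first have a D‐dimer; if it is negative, the sketch returns “no CTPA”, avoiding imaging and its incidental findings.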

Ordering and sequencing tests appropriately

Except in medical emergencies, selected tests relevant to the provisional diagnosis, and to excluding plausible “must not miss” diagnoses of serious but treatable diseases, should be requested first. Additional tests to investigate alternative diagnoses should wait unless the provisional diagnosis remains uncertain after initial results, clinical features change, or the patient fails to respond as expected to first line treatment. Requesting multiple tests at initial contact to cover several diagnostic possibilities should be avoided, unless an acute, progressive illness warrants this to avoid diagnostic delay. Consulting pathologists and radiologists, or evidence‐based guidelines, can help optimise test selection.33

Responding appropriately to diagnostic uncertainty

Diagnostic uncertainty has been associated with a propensity to request more tests, resulting in either diagnostic delay (false reassurance from a false negative result, with no consideration of alternative diagnoses) or unnecessary anxiety and overinvestigation (a false positive result suggesting serious disease). An alternative approach in uncertain situations is to engage with patients, validate their experience and symptoms, solicit their major concerns, explain the most likely diagnosis, briefly outline plausible differential diagnoses, openly acknowledge uncertainty and the limitations of tests in eliminating it, provide a management plan, map the expected time course for improvement, and arrange follow‐up clinician review as required.34

Applying system‐level strategies for optimising the use of investigations

Various system‐level strategies may also help optimise test use: changes to order entry rules within electronic medical records that steer clinicians towards more appropriate requests, education on test indications and costs, audit and feedback on test utilisation, and organisational policy changes.35 None of these strategies has been associated with an increase in missed diagnoses or related adverse events.35

Conclusion

Finding the sweet spot between underuse and overuse of diagnostic tests is essential for ensuring appropriate use of investigations and improving patient wellbeing. It is more likely to be achieved if the strategies outlined here are applied consistently in routine clinical practice.

Box 3 – Probabilistic approach to diagnosis

Knowledge and concepts

- Know prevalence of specific diseases in the target population in estimating pre‐test probability
- Know implications of history and examination for adjusting pre‐test probability
- Understand potential benefits and harms of tests
- Understand test sensitivity (and correlate of false negatives) and specificity (and correlate of false positives)
- Understand and use test likelihood ratios
- Understand which tests are useful in making a specific diagnosis highly likely or highly unlikely
- Understand test and treatment thresholds

Methods to improve clinician and patient understanding

- Practise shared decision making

Provenance: Not commissioned; externally peer reviewed.

- 1. Schiff GD, Hasan O, Kim S, et al. Diagnostic error in medicine: analysis of 583 physician‐reported errors. Arch Intern Med 2009; 169: 1881‐1887.

- 2. Welch HG, Schwartz L, Woloshin S. Overdiagnosed: making people sick in the pursuit of health. Boston (MA): Beacon Press, 2011.

- 3. O'Sullivan JW, Albasri A, Nicholson BD, et al. Overtesting and undertesting in primary care: a systematic review and meta‐analysis. BMJ Open 2018; 8: e018557.

- 4. Zhi M, Ding EL, Theisen‐Toupal J, et al. The landscape of inappropriate laboratory testing: a 15‐year meta‐analysis. PLoS One 2013; 8: e78962.

- 5. van der Weijden T, van Bokhoven MA, Dinant G‐J, et al. Understanding laboratory testing in diagnostic uncertainty: a qualitative study in general practice. Br J Gen Pract 2002; 52: 974‐980.

- 6. Korenstein D, Scherer LD, Foy A, et al. Clinician attitudes and beliefs associated with more aggressive diagnostic testing. Am J Med 2022; 135: e182‐e193.

- 7. Verghese A, Charlton B, Kassirer JP, et al. Inadequacies of physical examination as a cause of medical errors and adverse events: a collection of vignettes. Am J Med 2015; 128: 1322‐1324.

- 8. Watson J, de Salis I, Banks J, Salisbury C. What do tests do for doctors? A qualitative study of blood testing in UK primary care. Fam Pract 2017; 34: 735‐739.

- 9. Al Qahtani DA, Rotgans JI, Mamede S, et al. Factors underlying suboptimal diagnostic performance in physicians under time pressure. Med Educ 2018; 52: 1288‐1298.

- 10. Hill JR, Visweswaran S, Ning X, Schleyer TTK. Use, impact, weaknesses, and advanced features of search functions for clinical use in electronic health records: a scoping review. Appl Clin Inform 2021; 12: 417‐428.

- 11. Kroenke K. A practical and evidence‐based approach to common symptoms: a narrative review. Ann Intern Med 2014; 161: 579‐586.

- 12. McDonald IG, Daly J, Jelinek VM, et al. Opening Pandora's box: the unpredictability of reassurance by a normal test result. BMJ 1996; 313: 329‐332.

- 13. Polaris JJZ, Katz JN. “Appropriate” diagnostic testing: supporting diagnostics with evidence‐based medicine and shared decision making. BMC Res Notes 2014; 7: 922.

- 14. Rolfe A, Burton C. Reassurance after diagnostic testing with a low pretest probability of serious disease: systematic review and meta‐analysis. JAMA Intern Med 2013; 173: 407‐416.

- 15. Morgan DJ, Pineles L, Owczarzak J, et al. Accuracy of practitioner estimates of probability of diagnosis before and after testing. JAMA Intern Med 2021; 181: 747‐755.

- 16. Whiting PF, Davenport C, Jameson C, et al. How well do health professionals interpret diagnostic information? A systematic review. BMJ Open 2015; 5: e008155.

- 17. Bianchi MT, Alexander BM, Cash SS. Incorporating uncertainty into medical decision making: an approach to unexpected test results. Med Decis Making 2009; 29: 116‐124.

- 18. Djulbegovic B, van den Ende J, Hamm RM, et al; International Threshold Working Group (ITWG). When is it rational to order a diagnostic test, or prescribe treatment: the threshold model as an explanation of practice variation. Eur J Clin Invest 2015; 45: 485‐493.

- 19. Siontis KC, Siontis GCM, Contopoulos‐Ioannidis DG, Ioannidis JPA. Diagnostic tests often fail to lead to changes in patient outcomes. J Clin Epidemiol 2014; 67: 612‐621.

- 20. Korevaar DA, Wang J, van Enst WA, et al. Reporting diagnostic accuracy studies: some improvements after 10 years of STARD. Radiology 2015; 274: 781‐789.

- 21. Stern SDC, Cifu AS, Altkorn D. Symptom to diagnosis: an evidence‐based guide, 3rd ed. Chapter 1: diagnostic process. New York (NY): McGraw‐Hill, 2015.

- 22. Slater TA, Wadund AA, Ahmed N. Pocketbook of differential diagnosis, 5th ed. London: Elsevier, 2021.

- 23. Fanshawe TR, Power M, Graziadio S, et al. Interactive visualisation for interpreting diagnostic test accuracy study results. BMJ Evid Based Med 2018; 23: 13‐16.

- 24. Genders TSS, Steyerberg EW, Hunink MGM, et al. Prediction model to estimate presence of coronary artery disease: retrospective pooled analysis of existing cohorts. BMJ 2012; 344: e3485.

- 25. Testing Wisely. Disease risk calculator. https://calculator.testingwisely.com/ (viewed Mar 2023).

- 26. Phillips P. Pitfalls in interpreting laboratory results. Aust Prescr 2009; 32: 43‐46.

- 27. Bray C, Bell LN, Liang H, et al. Erythrocyte sedimentation rate and C‐reactive protein measurements and their relevance in clinical medicine. WMJ 2016; 115: 317‐321.

- 28. Baugh CW, Greenberg JO, Mahler SA, et al. Implementation of a risk stratification and management pathway for acute chest pain in the emergency department. Crit Pathw Cardiol 2016; 15: 131‐137.

- 29. Perera M, Aggarwal L, Scott IA, Cocks N. Underuse of risk assessment and overuse of computed tomography pulmonary angiography in patients with suspected pulmonary thromboembolism. Intern Med J 2017; 47: 1154‐1160.

- 30. Redinger K, Rozin E, Schiller T, et al. The impact of pop‐up clinical electronic health record decision tools on ordering pulmonary embolism studies in the emergency department. J Emerg Med 2022; 62: 103‐108.

- 31. Riches N, Panagioti M, Alam R, et al. The effectiveness of electronic differential diagnoses (DDX) generators: a systematic review and meta‐analysis. PLoS One 2016; 11: e0148991.

- 32. Singh H, Giardina TD, Petersen LA, et al. Exploring situational awareness in diagnostic errors in primary care. BMJ Qual Saf 2012; 21: 30‐38.

- 33. Kratz A, Laposata M. Enhanced clinical consulting — moving toward the core competencies of laboratory professionals. Clin Chim Acta 2002; 319: 117‐125.

- 34. Khazen M, Mirica M, Carlile N, et al. Developing a framework and electronic tool for communicating diagnostic uncertainty in primary care: a qualitative study. JAMA Netw Open 2023; 6: e232218.

- 35. Rubinstein M, Hirsch R, Bandyopadhyay K, et al. Effectiveness of practices to support appropriate laboratory test utilization: a laboratory medicine best practices systematic review and meta‐analysis. Am J Clin Pathol 2018; 149: 197‐221.

Open access:

Open access publishing facilitated by The University of Queensland, as part of the Wiley ‐ The University of Queensland agreement via the Council of Australian University Librarians.

No relevant disclosures.