The known: Entrustable professional activities (EPAs) have a strong theoretical basis, and can be employed to assess the quality of care provided by medical trainees.

The new: Entrustment levels for both clinical and non‐clinical tasks were higher for senior than for junior trainees, levels of entrustment for junior trainees increased over 9 months of training, and the concordance of self‐assessment of entrustment level with supervisor assessments was greater for senior trainees than junior trainees.

The implications: EPAs are valuable tools for assessing the performance of general practice trainees in providing care for patients during their community workplace‐based training.

Entrustable professional activities (EPAs) were developed in response to the shift in medical education toward competency‐based assessment.1,2 Scientific knowledge has been the centre of medical training since the 1910 Flexner report on medical education in North America,3 and the dominant aim has subsequently been to integrate scientific practice and findings into the curricula of university medical schools. Toward the end of the 20th century, a change in thinking required doctors to possess not only technical expertise and medical knowledge, but also the ability to work effectively in teams and to navigate complex health care systems.4 This shift has been complemented in a variety of contexts by developing competency‐based frameworks for training and assessing medical students5 and by replacing traditional final and barrier testing with longitudinal and programmatic assessment.6

One of the challenges of competency‐based medical education has been assessing the competence of trainees in busy clinical settings. Competence cannot be observed directly; it must be inferred from what trainees say and do, creating a gap between the competencies to be assessed and the daily tasks of doctors.4 EPAs were designed as a practical response to this problem.7 An EPA is a task or unit of professional practice that can be fully entrusted to a trainee as soon as they have shown they can perform it unsupervised.8 Whereas competencies are characteristics of individuals, EPAs are trainee activities observable in the workplace,9 reflecting decision making by supervisors in normal clinical practice.10

Clinical supervisors entrust clinical tasks to trainees several times a day in almost every clinical setting, as they are generally not in the same room as the trainee.11 Trust requires interdependence between the truster (supervisor or patient) and the trustee (trainee) and creates supervisor vulnerability, as the supervisor is responsible for the quality of care delivered by the trainee.11 Entrustment decisions must reconcile the educational need to push learners to extend their scope of performance with the obligation to ensure safe, high quality care. Making entrustment decisions explicit can lead to more effective assessments and firmly return the patient to the centre of educational decisions.10

Entrustment decisions that mimic everyday decision making by supervisors in clinical practice have been reported to improve the psychometric qualities of workplace‐based assessment. For instance, in an examination of entrustment decision making by supervisors of anaesthesia registrars in Australia and New Zealand, a conventional mini‐clinical evaluation exercise (mini‐CEX) scoring system was modified to include an entrustment scale on which supervisors rated trainees’ levels of independence as “supervisor required in the theatre suite”, “supervisor required in the hospital”, or “supervisor not required”. The authors found that a reliability coefficient of 0.70 was achieved with only nine assessments per registrar when the scale was used, compared with 50 assessments with the conventional scoring system. Further, the modified scoring system identified more trainees performing below expectations.12

While the rationale for using EPAs is clear, testing of their construct validity has been limited,9 although some international and hospital‐based studies have found marked educational benefits.13,14,15 Construct validation is an extensive process, but its central component is testing whether scores reflect expectations about the construct the test purports to measure.16

Employing EPAs in a diverse Australian community setting, such as general practice training, has not been examined. We therefore assessed the construct validity of assessment with EPAs in a postgraduate training environment by determining the sensitivity of EPA assessment processes for assessing competency development in trainees. Prior to our study, there had been no EPAs for assessing general practice trainees in Australia, although EPAs had been developed for psychiatry, surgical, adult internal, and paediatric medicine training,17,18,19,20 and in North America for family and palliative medicine training.9,21,22 We tested three hypotheses:

- Entrustment levels for junior trainees increase over time;

- Entrustment levels for senior trainees are higher than those for junior trainees; and

- Self‐assessment of entrustment levels by senior trainees more closely matches supervisor assessment than self‐assessment by junior trainees.

Methods

Study cohort

Australian general practice training includes one year of hospital‐based training followed by at least 2 years’ community‐based training for urban trainees, or 3 years for rural trainees. EPA assessments replaced mid‐ and end semester trainee assessments for general practice trainees in South Australia from January 2017. We analysed data from 2017 for all general practice trainees in South Australia. Hospital‐based trainees were not included. Trainees were defined as junior trainees (first 12 months of community‐based training) or senior trainees (more than 12 months’ experience).

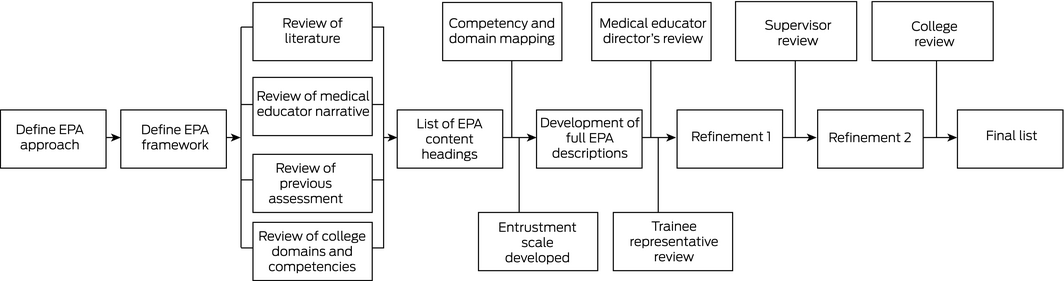

Developing the entrustable professional activities

Published literature and guidelines informed the development of the 13 EPAs in the process summarised in Box 1.23 Overall competence comprises a number of specific competencies applied repeatedly and contemporaneously.24,25 By limiting the number of EPAs to 13 that encompassed broad responsibilities, we ensured their use would be practicable for supervisors and trainees, while still providing opportunities for specific feedback.8,24 EPA content headings were developed after a comprehensive review of the relevant literature and of domains and competencies of the curricula of the Royal Australian College of General Practitioners26 and the Australian College of Rural and Remote Medicine.27 Throughout the development process, key stakeholders, including supervisors, trainees, medical educators and college representatives, were consulted to ensure that published standards for content validation were met.23

Prior to introducing EPA assessment of trainees, a systematic change management approach was adopted to ensure engagement with the process. Short videos on the EPA concept were presented at education events for supervisors and placed online, and personalised communications with key messages advocating the change were forwarded to supervisors.

Data collection

Entrustment levels for the 13 EPAs were assessed by supervisors and by trainee self‐assessment at 3‐month intervals (April, July, October, January) during 2017–18. EPAs 1–8 (and, for rural trainees only, EPA 13) were clinical in nature; EPAs 9–12 were non‐clinical (Box 2, Box 3). Assessments were uploaded to the usual online learning management system, and data on entrustment level were extracted from the system for analysis. The entire dataset was used for each analysis, except that trainees with missing data for a particular analysis were excluded from that analysis.

Statistical analysis

Statistical analyses were conducted in Prism (GraphPad). For each EPA, the frequency distributions of levels of entrustment (1–4) at 3 months for junior and senior trainees (scored by supervisors) were compared in Mann–Whitney U tests; after Bonferroni correction for multiple comparisons, P < 0.004 (one‐tailed) was deemed statistically significant. The ratio of the proportions of senior and junior trainees entrusted by supervisors with unsupervised practice at 3 months was calculated, with 95% confidence intervals (CIs) estimated with the Koopman asymptotic method.
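The comparison described above can be illustrated with a minimal sketch. It is not the authors' Prism workflow: the entrustment levels below are hypothetical, the function name `mann_whitney_u` is our own, and the sketch computes only the U statistic (a statistical package would be needed for the P value). The threshold assumes that the reported cut‐off of P < 0.004 corresponds to 0.05 divided by the 13 EPAs.

```python
def mann_whitney_u(sample_a, sample_b):
    """U statistic for sample_a: count of (a, b) pairs with a > b, ties counted as 0.5."""
    u = 0.0
    for a in sample_a:
        for b in sample_b:
            if a > b:
                u += 1.0
            elif a == b:
                u += 0.5
    return u


# Hypothetical supervisor-rated entrustment levels (1-4) for one EPA.
junior = [1, 2, 2, 3, 3, 4]
senior = [3, 3, 4, 4, 4, 4]

u_senior = mann_whitney_u(senior, junior)
u_junior = mann_whitney_u(junior, senior)

# Assumed Bonferroni correction: overall alpha of 0.05 shared across 13 EPA comparisons.
threshold = 0.05 / 13

print("U (senior):", u_senior)
print("U (junior):", u_junior)
print("Per-comparison threshold:", round(threshold, 4))
```

The two U values always sum to the number of junior and senior pairs, so a U for senior trainees close to that total indicates that senior ratings are consistently higher.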

Changes over 9 months in the proportions of EPAs junior trainees entrusted with unsupervised practice, separately for pooled clinical and non‐clinical EPAs, are expressed as ratios with respect to the 3‐month levels (with 95% CIs estimated with the Koopman asymptotic method).
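As an illustration of how a ratio of two proportions and its confidence interval can be obtained, the sketch below uses the simpler log‐based (Katz) approximation rather than the Koopman asymptotic method used in the study, so its limits will differ slightly from the published ones. The function name is hypothetical; the counts are those reported for EPA 1 at 3 months (junior, 53/130; senior, 123/147).

```python
import math


def proportion_ratio_katz(x1, n1, x0, n0, z=1.96):
    """Ratio of proportions (x1/n1) / (x0/n0) with a log-based (Katz) 95% CI.

    This is an approximation swapped in for illustration; it is NOT the
    Koopman asymptotic score method named in the text.
    """
    ratio = (x1 / n1) / (x0 / n0)
    se_log = math.sqrt(1 / x1 - 1 / n1 + 1 / x0 - 1 / n0)
    lower = math.exp(math.log(ratio) - z * se_log)
    upper = math.exp(math.log(ratio) + z * se_log)
    return ratio, lower, upper


# EPA 1 at 3 months: senior 123/147 v junior 53/130.
ratio, lower, upper = proportion_ratio_katz(123, 147, 53, 130)
print(f"ratio {ratio:.2f} (95% CI, {lower:.2f}-{upper:.2f})")
```

The point estimate agrees with the reported ratio for EPA 1; the interval is close to, but not identical with, the Koopman interval because the two methods handle the sampling distribution differently.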

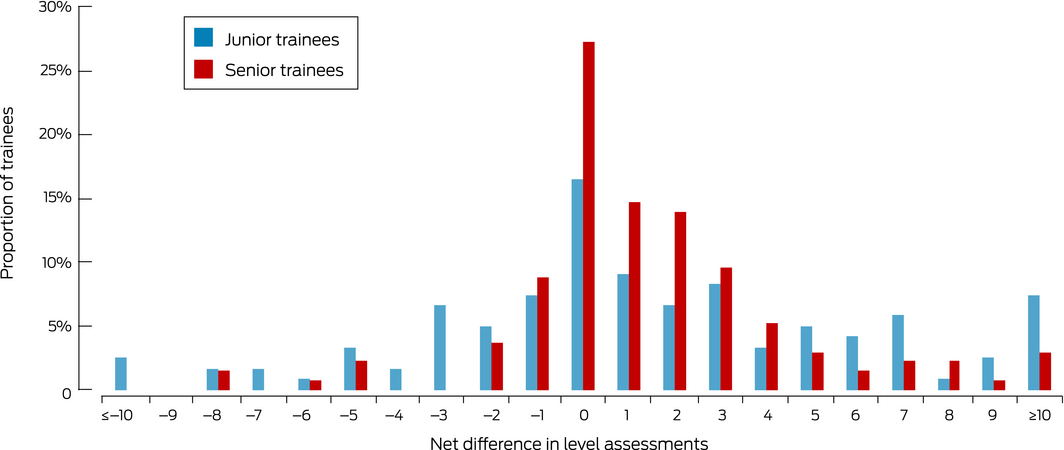

To assess concordance of assessments of levels of entrustment by supervisors and trainees, the differences for each EPA were summed to obtain a net difference for each trainee (0 = trainee and supervisor overall scores agreed; negative difference: higher net score by trainee; positive difference: higher net score by supervisor). The spread of net differences was visualised in a frequency chart, and compared for junior and senior trainees in an independent samples t test.
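The net difference calculation can be sketched as follows. The ratings are hypothetical, the function names are our own, and two details are assumptions not stated in the text: that only EPAs rated by both parties contribute to the sum, and that the independent samples t test used a pooled variance.

```python
import math
from statistics import mean, variance


def net_difference(supervisor, trainee):
    """Sum of (supervisor - trainee) entrustment levels over EPAs rated by both.

    Negative: higher net score by trainee; positive: higher net score by supervisor.
    """
    shared = supervisor.keys() & trainee.keys()
    return sum(supervisor[epa] - trainee[epa] for epa in shared)


def pooled_t(a, b):
    """Independent samples t statistic with pooled variance (assumed variant)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var * (1 / na + 1 / nb))


# Hypothetical ratings (EPA number -> entrustment level 1-4) for one trainee.
supervisor_ratings = {1: 2, 2: 2, 3: 3}
trainee_ratings = {1: 3, 2: 3, 3: 3}
example_net = net_difference(supervisor_ratings, trainee_ratings)

# Hypothetical net differences for two small groups of trainees.
t_stat = pooled_t([1, 2, 3], [4, 5, 6])
print(example_net, round(t_stat, 3))
```

Because differences are summed with their signs, over‐ and under‐estimates on different EPAs can cancel; a net difference of 0 therefore indicates overall agreement rather than agreement on every EPA.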

Ethics approval

Ethics approval for the study was granted by the Flinders University Human Research Ethics Committee (reference, 7882).

Results

Demographic information

A total of 283 trainees completed at least one EPA assessment in 2017, including 168 urban and 115 rural trainees; 130 were junior trainees (48 men, 82 women), 153 were senior trainees (71 men, 82 women). Data were missing for seven trainees (2.5%) at 3 months.

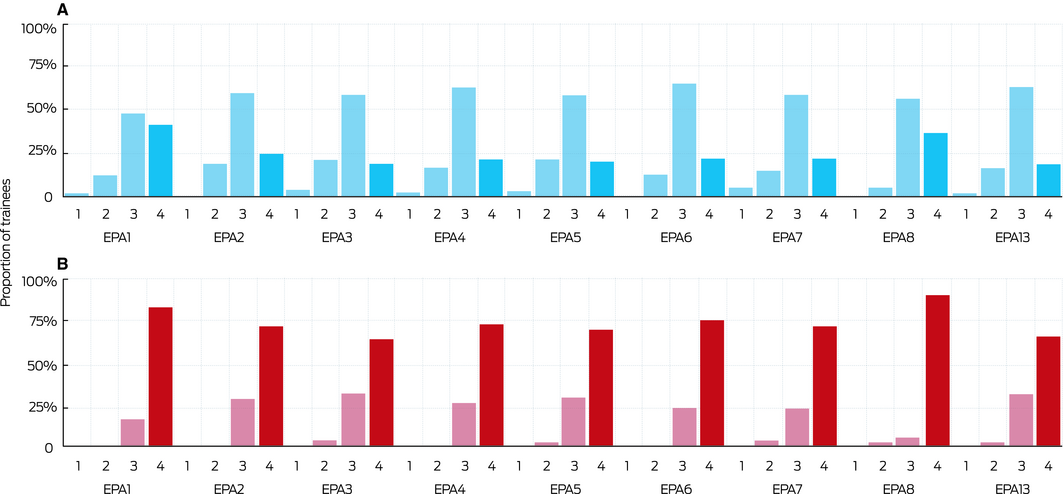

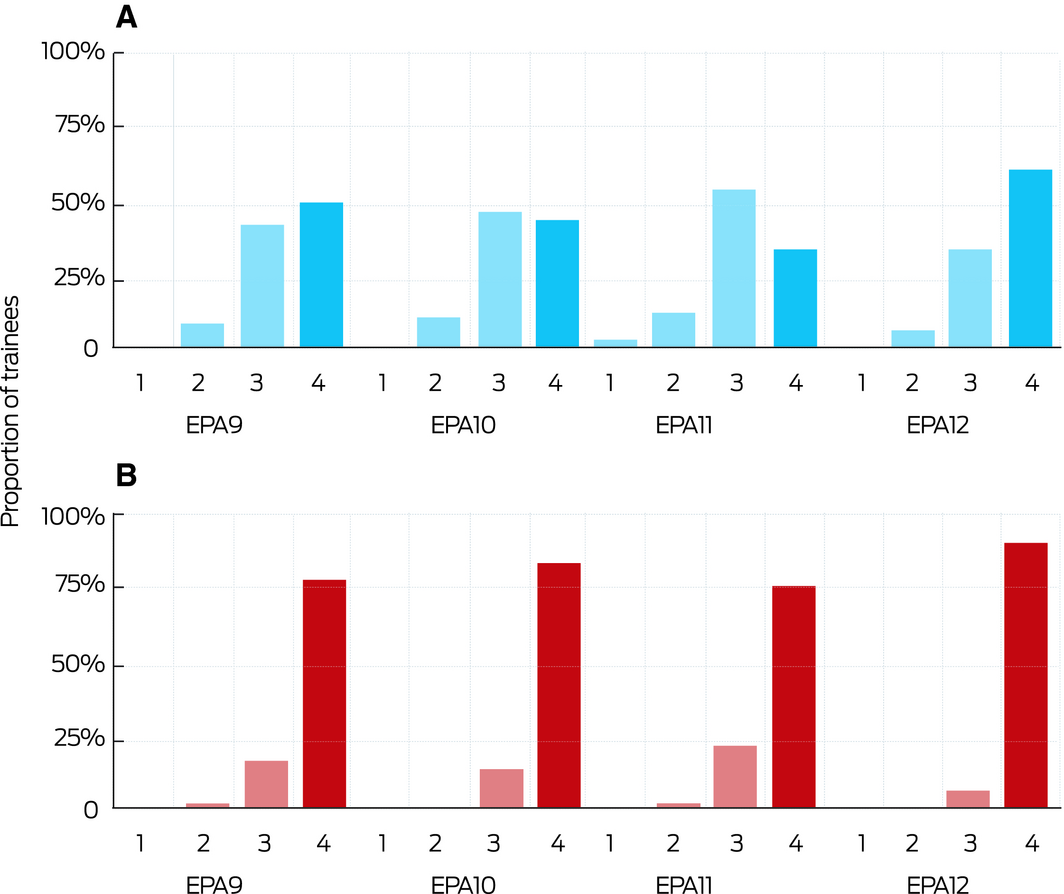

Levels of entrustment: junior v senior trainees

The number of trainees entrusted with performing individual clinical EPAs without supervision at 3 months ranged from 24 (19%; EPA 3) to 53 (41%; EPA 1) for junior trainees, and from 94 (64%; EPA 3) to 136 (93%; EPA 8) for senior trainees (Box 4). Senior trainees were 2.1 (95% CI, 1.66–2.58; EPA 1) to 3.7 times (95% CI, 2.60–5.28; EPA 4) as likely as junior trainees to be entrusted with performing clinical EPAs without supervision (Box 5). The differences between junior and senior trainees with regard to non‐clinical EPAs were also statistically significant (Box 5, Box 6).

Change in proportion of junior trainees judged able to practise unsupervised

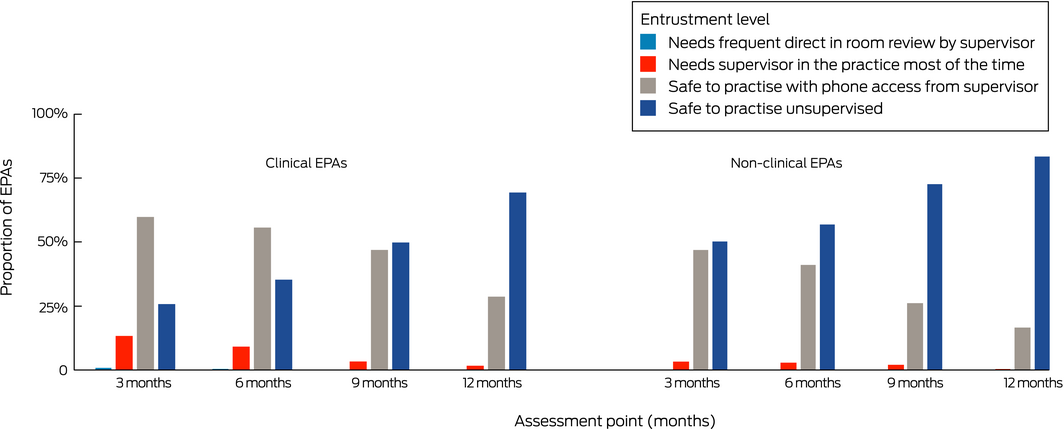

Complete data for all four assessments were available for 70 junior trainees. The proportion of clinical EPAs that these trainees were entrusted to perform unsupervised increased from 26% at 3 months to 35% at 6 months (rate ratio [RR], 1.37; 95% CI, 1.15–1.63), to 50% at 9 months (RR, 1.92; 95% CI, 1.64–2.26), and 69% at 12 months (RR, 2.68; 95% CI, 2.32–3.12). For non‐clinical EPAs, the proportion increased from 50% at 3 months to 56% at 6 months (RR, 1.13; 95% CI, 0.97–1.32), 72% at 9 months (RR, 1.44; 95% CI, 1.26–1.66), and 83% at 12 months (RR, 1.66; 95% CI, 1.47–1.90) (Box 7).

Concordance of supervisor and trainee assessments of ability to practise unsupervised

Self‐assessments of entrustment level at 3 months were available for 121 junior and 136 senior trainees. The mean differences in overall entrustment ratings between supervisor and trainee were 5.5 points (SD, 6.6) for junior trainees and 2.9 points (SD, 2.8) for senior trainees (P < 0.001) (Box 8).

Discussion

Our results confirmed all three hypotheses: entrustment levels for both clinical and non‐clinical tasks were higher for senior than for junior trainees; levels of entrustment for junior trainees increased over 9 months of training (from the 3‐month to the 12‐month assessment); and concordance of supervisor assessments with self‐assessments was greater for senior trainees than junior trainees.

The goal of medical education is to teach trainees how to provide safe professional care.10 In our study, the trust of supervisors in the competence of their trainees increased with time; for each clinical EPA, senior trainees were at least twice as likely as junior trainees to be entrusted with performing tasks independently. Further, the proportion of clinical EPAs that junior trainees were entrusted to perform unsupervised more than doubled over 9 months in their supervised training environment. Balanced, distant supervision that monitors safe practice while also providing trainees an authentic experience of responsibility is essential for learning to practise independently.10 From a risk management perspective, EPA assessments provide a method for maintaining the safety of health care while enhancing the training of the future health care workforce.

Self‐assessments by senior trainees were more closely concordant with the assessments of their supervisors than were self‐assessments by junior trainees, which suggests that trainees were initially not aware of what they did or did not know. As trainees develop expertise, unconscious gaps in competency awareness close. Self‐regulated learning, including evaluation of one's own learning, is essential for becoming a safe practitioner.28 Identifying overconfident trainees, so that support and learning strategies can be instigated, is also an important aspect of workplace‐based assessment.

Despite differing contexts, our results are similar to those of other studies. For example, entrustment levels for paediatric medicine residents in an American study increased across each year of training and from year to year.13 Similarly, a study of medical students in Canada noted that entrustment scores increased over time during a surgical rotation.14 A study of general surgical residents found that residents scored their global EPA performance higher than faculty physicians, but their operative EPA performance ratings were similar.15 Each of these studies was undertaken in the slightly more controlled educational setting of hospitals; our study found that their findings were replicable in less structured community‐based settings.

With increasing evidence for the utility of EPAs, it is likely that postgraduate governing bodies will include EPAs as an integral part of trainee assessment. However, it is important that EPAs are carefully designed for their intended context rather than simply extrapolated from one environment to another.5,29

Our results indicate that EPAs are a robust assessment tool in workplace‐based training in a carefully designed setting. Although we did not seek to assess how EPA assessment achieved its aims, four factors probably contributed. First, stakeholders (supervisors, medical educators, trainees, college representatives) were significantly engaged during the development process, which focused on content validation to ensure that the EPAs were aligned with the core activities of general practice.8 Second, construct validity was enhanced by reviewing EPA content during the development process.23 Third, EPAs were acceptable to trainees and supervisors because they reflected activities of daily clinical practice, they were described in normal clinical language that was clear for trainees and supervisors, and they allowed them to express feelings of trustworthiness.9,23 Finally, we intentionally applied co‐design principles to facilitate the change management process.

Limitations

As is often the case in education research,29 the generalisability of our findings is limited, as we examined a specific set of EPAs in a single postgraduate setting. Anchoring bias (that is, an assessment being influenced by an initial piece of information) may have resulted from the supervisors knowing the level of training of trainees. We attempted to minimise anchoring by ensuring that supervisors did not have easy access to earlier EPA ratings during the 6‐, 9‐ and 12‐month assessments. As we preferred ecological validity to being able to draw causal conclusions, we did not include a control group in our study. The differences we found were of a magnitude rarely seen in naturalistic field studies, in which ecological validity is more important than optimising the comparability of groups and isolating individual factors.

Conclusion

In addition to having a strong theoretical basis, EPAs are useful practical assessment tools in a workplace‐based training environment, even in educational settings that are less structured than, for example, hospitals. How best to adapt our findings to other settings, and how supervisors make decisions about entrustment levels for clinical and non‐clinical EPAs, should be further investigated. Our study may help advance the efficiency and effectiveness of EPAs as assessment tools. Given the importance of patient safety in medical education and of the trust relationship between trainees and patients, the role of the patient in entrustment decisions should also be examined.

Box 1 – Process for developing entrustable professional activities (EPAs) for the assessment of general practice trainees

Box 2 – Content headings of the end‐of‐training entrustable professional activities (EPAs)

- 1. Take a comprehensive history and perform an examination of all patients

- 2. Identify common working diagnoses and prioritise a list of differential diagnoses

- 3. Manage the care of patients with common acute symptoms and diseases in several care settings

- 4. Manage the care of patients with common chronic diseases and multiple morbid conditions

- 5. Manage sex‐related health problems

- 6. Manage mental health problems

- 7. Manage the care of children and adolescents

- 8. Follow screening protocols and primary prevention guidelines

- 9. Lead and work in professional teams

- 10. Communicate effectively and develop partnerships with patients, carers and families

- 11. Demonstrate time management and practice management skills

- 12. Demonstrate attributes expected of a general practitioner

- 13. Rural trainees only: demonstrate an ability to manage emergency on‐call and inpatient care

Box 3 – Definitions of entrustment levels

- 1. Needs frequent direct in‐room review by supervisor

- 2. Needs onsite supervisor in the practice most of the time

- 3. Safe to practise with phone contact with supervisor

- 4. Safe to practise unsupervised

Box 4 – Supervisor‐determined levels of entrustment* for junior (A) and senior trainees (B) for clinical entrustable professional activities (EPAs) at 3‐month assessment

* 1. Needs frequent direct in‐room review by supervisor. 2. Needs onsite supervisor in the practice most of the time. 3. Safe to practise with phone contact with supervisor. 4. Safe to practise unsupervised. For each EPA, the distribution of entrustment levels for junior and senior trainees was significantly different (Mann–Whitney, P < 0.001). ◆

Box 5 – Ratio of the proportions of senior and junior trainees scored as being safe to practise unsupervised by supervisors at 3‐month assessment

| | Junior trainees | Senior trainees | Rate ratio (95% CI) |
|---|---|---|---|
| Clinical entrustable professional activities | | | |
| EPA 1 | 53/130 (41%) | 123/147 (84%) | 2.05 (1.66–2.58) |
| EPA 2 | 31/130 (24%) | 105/147 (71%) | 3.00 (2.20–4.18) |
| EPA 3 | 24/129 (19%) | 94/146 (64%) | 3.46 (2.40–5.10) |
| EPA 4 | 26/129 (20%) | 107/145 (74%) | 3.66 (2.60–5.28) |
| EPA 5 | 25/129 (19%) | 102/146 (70%) | 3.60 (2.51–4.93) |
| EPA 6 | 28/130 (22%) | 110/147 (75%) | 3.47 (2.51–4.93) |
| EPA 7 | 28/129 (22%) | 105/147 (71%) | 3.29 (2.37–4.68) |
| EPA 8 | 47/129 (36%) | 136/147 (93%) | 2.54 (2.04–3.24) |
| EPA 13 | 9/49 (18%) | 45/67 (67%) | 3.66 (2.07–6.86) |
| Non‐clinical entrustable professional activities | | | |
| EPA 9 | 65/130 (50%) | 120/147 (82%) | 1.63 (1.36–1.99) |
| EPA 10 | 56/129 (43%) | 128/147 (87%) | 2.01 (1.65–2.50) |
| EPA 11 | 43/128 (34%) | 115/148 (78%) | 2.31 (1.81–3.03) |
| EPA 12 | 79/129 (61%) | 137/146 (94%) | 1.53 (1.34–1.79) |

CI = confidence interval. ◆

Box 6 – Supervisor‐determined levels of entrustment for junior (A) and senior trainees (B) for non‐clinical entrustable professional activities (EPAs) at 3‐month assessment

For each EPA, the distribution of entrustment levels (1–4; Box 3) for junior and senior trainees was significantly different (Mann–Whitney, P < 0.001). ◆

Box 7 – Entrustment levels for 70 junior trainees during first 12 months of training, by clinical and non‐clinical entrustable professional activities (EPAs)*

* Depicted are the proportions of clinical EPAs junior trainees were trusted to perform at the four entrustment levels. Total number of clinical EPAs per assessment point, 584–588; of non‐clinical EPAs, 276–280. Complete data for all four assessments were available only for 70 trainees. ◆

Received 27 July 2018, accepted 31 January 2019

- Nyoli Valentine1

- Jon Wignes1

- Jill Benson1

- Stephanie Clota1

- Lambert WT Schuwirth2,3

- 1 ModMed Institute of Health Professions Education, Adelaide, SA

- 2 Prideaux Centre for Research in Health Professions Education, Flinders University, Adelaide, SA

- 3 Educational Development and Research, Maastricht University, Maastricht, The Netherlands

We acknowledge GPEx for their collaboration in this research.

No relevant disclosures.

- 1. ten Cate O. Entrustability of professional activities and competency‐based training. Med Educ 2005; 39: 1176–1177.

- 2. ten Cate O, Scheele F. Competency‐based postgraduate training: can we bridge the gap between theory and clinical practice? Acad Med 2007; 82: 542–547.

- 3. Frenk J, Chen L, Bhutta ZA, et al. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet 2010; 376: 1923–1958.

- 4. ten Cate O, Tobin S, Stokes ML. Bringing competencies closer to day‐to‐day clinical work through entrustable professional activities. Med J Aust 2017; 206: 14–16. https://www.mja.com.au/journal/2017/206/1/bringing-competencies-closer-day-day-clinical-work-through-entrustable

- 5. CanMEDS 2000: extract from the CanMEDS 2000 Project Societal Needs Working Group report. Med Teach 2000; 22: 549–554.

- 6. van der Vleuten C, Lindemann I, Schmidt L. Programmatic assessment: the process, rationale and evidence for modern evaluation approaches in medical education. Med J Aust 2018; 209: 386–388. https://www.mja.com.au/journal/2018/209/9/programmatic-assessment-process-rationale-and-evidence-modern-evaluation

- 7. Chen HC, van den Broek WE, ten Cate O. The case for use of entrustable professional activities in undergraduate medical education. Acad Med 2015; 90: 431–436.

- 8. ten Cate O, Chen HC, Hoff RG, et al. Curriculum development for the workplace using Entrustable Professional Activities (EPAs): AMEE Guide No. 99. Med Teach 2015; 37: 983–1002.

- 9. Schultz K, Griffiths J, Lacasse M. The application of entrustable professional activities to inform competency decisions in a family medicine residency program. Acad Med 2015; 90: 888–897.

- 10. ten Cate O, Hart D, Ankel F, et al. Entrustment decision making in clinical training. Acad Med 2016; 91: 191–198.

- 11. ten Cate O. Trust, competence, and the supervisor's role in postgraduate training. BMJ 2006; 333: 748–751.

- 12. Weller JM, Misur M, Nicolson S, et al. Can I leave the theatre? A key to more reliable workplace‐based assessment. Br J Anaesth 2014; 112: 1083–1091.

- 13. Mink RB, Schwartz A, Herman BE, et al. Validity of level of supervision scales for assessing pediatric fellows on the common pediatric subspecialty entrustable professional activities. Acad Med 2018; 93: 283–291.

- 14. Curran VR, Deacon D, Schulz H, et al. Evaluation of the characteristics of a workplace assessment form to assess entrustable professional activities (EPAs) in an undergraduate surgery core clerkship. J Surg Educ 2018; 75: 1211–1222.

- 15. Wagner JP, Lewis CE, Tillou A, et al. Use of entrustable professional activities in the assessment of surgical resident competency. JAMA Surg 2018; 153: 335–343.

- 16. Cronbach LJ, Meehl PE. Construct validity in psychological tests. Psychol Bull 1955; 52: 281–302.

- 17. Boyce P, Spratt C, Davies M, McEvoy P. Using entrustable professional activities to guide curriculum development in psychiatry training. BMC Med Educ 2011; 11: 96.

- 18. Royal Australian College of Physicians. Basic training: entrustable professional activities (EPA). Aug 2017. https://www.racp.edu.au/docs/default-source/default-document-library/entrustable-professional-activities-for-basic-trainees-in-adult-internal-medicine-and-paediatrics-child-health.pdf?sfvrsn=16dc0d1a_4 (viewed Dec 2018).

- 19. Moore D, Young CJ, Hong J. Implementing entrustable professional activities: the yellow brick road towards competency‐based training? ANZ J Surg 2017; 87: 1001–1005.

- 20. Royal Australian and New Zealand College of Psychiatrists. Entrustable professional activities (EPAs). 2012. https://www.ranzcp.org/pre-fellowship/assessments-workplace/epas (viewed Mar 2019).

- 21. Shaughnessy AF, Sparks J, Cohen‐Osher M, et al. Entrustable professional activities in family medicine. J Grad Med Educ 2013; 5: 112–118.

- 22. Myers J, Krueger P, Webster F, et al. Development and validation of a set of palliative medicine entrustable professional activities: findings from a mixed methods study. J Palliat Med 2015; 18: 682–690.

- 23. Chen HC, McNamara M, Teherani A, et al. Developing entrustable professional activities for entry into clerkship. Acad Med 2016; 91: 247–255.

- 24. ten Cate O. Nuts and bolts of entrustable professional activities. J Grad Med Educ 2013; 5: 157–158.

- 25. Harden RM, Crosby JR, Davis MH, Friedman M. AMEE Guide No. 14: Outcome‐based education, part 5. From competency to meta‐competency: a model for the specification of learning outcomes. Med Teach 1999; 21: 546–552.

- 26. Royal Australian College of General Practitioners. Competency profile of the Australian general practitioner at the point of fellowship. Dec 2015. https://www.racgp.org.au/download/Documents/VocationalTrain/Competency-Profile.pdf (viewed Mar 2019).

- 27. Australian College of Rural and Remote Medicine. Primary curriculum. Fourth edition. Feb 2013. http://www.acrrm.org.au/docs/default-source/documents/training-towards-fellowship/primary-curriculum-august-2016.pdf?sfvrsn=0 (viewed Mar 2019).

- 28. Pintrich P. A conceptual framework for assessing motivation and self‐regulated learning in college students. Educ Psychol Rev 2004; 16: 385–407.

- 29. Schuwirth LW, Durning SJ. Educational research: current trends, evidence base and unanswered questions. Med J Aust 2018; 208: 161–163. https://www.mja.com.au/journal/2018/208/4/educational-research-current-trends-evidence-base-and-unanswered-questions

Abstract

Objective: To assess whether entrustment levels for junior trainees with respect to entrustable professional activities (EPAs) increase over time; whether entrustment levels for senior trainees are higher than for junior trainees; and whether self‐assessment of entrustment levels by senior trainees more closely matches supervisor assessment than self‐assessment by junior trainees.

Design, setting, participants: Observational study of 130 junior and 153 senior community‐based general practice trainees in South Australia, 2017.

Main outcome measures: Differences in entrustment levels between junior and senior trainees; change in entrustment levels for junior trainees over 9 months; concordance of supervisor and trainee assessment of entrustment level at 3 months.

Results: Senior trainees were 2.1 (95% CI, 1.66–2.58) to 3.7 times (95% CI, 2.60–5.28) as likely as junior trainees to be entrusted with performing clinical EPAs without supervision. The proportion of clinical EPAs that junior trainees were entrusted to perform unsupervised increased from 26% at 3 months to 35% at 6 months (rate ratio [RR], 1.37; 95% CI, 1.15–1.63), to 50% at 9 months (RR, 1.92; 95% CI, 1.64–2.26), and 69% at 12 months (RR, 2.68; 95% CI, 2.32–3.12). At 3 months, the mean difference in entrustment ratings between supervisors and trainees was 5.5 points (SD, 6.6 points) for junior trainees and 2.9 points (SD, 2.8 points) for senior trainees (P < 0.001).

Conclusions: EPAs are valid assessment tools in a workplace‐based training environment.