Decision support tools driven by artificial intelligence are a new clinical method that clinicians need to embrace

The practice of medicine is changing rapidly, in line with society, where our lives are now driven by the digital revolution. Health has lagged behind, in part because it remains focused on human interactions. While digital interactions with banks are now the norm, most aspects of health still require human interaction. Recently The Lancet opined: “A scenario in which medical information, gathered at the point of care, is analysed using sophisticated machine algorithms to provide real‐time actionable analytics, seems to be within touching distance”. This was closely followed by “Despite the excitement around these sophisticated [artificial intelligence] technologies, very few are in clinical use”.1 In this article, we describe such an algorithm that was deployed in Australia in 2018, with commentary on issues that were once theoretical but now need to be considered in detail.

Building on a previous program,2 several primary health networks (PHNs) across Victoria and Sydney have made available their pooled, de‐identified primary care data for collaborative research. With recent expansion, Outcome Health's POLAR (Population Level Analysis and Reporting tool) Data Space will have information from more than 500 practices. The Victorian PHNs involved are piloting a predictive tool to assist general practitioners with determining a patient's individual risk of attending an emergency department (ED).

Risk of ED attendance is a long‐standing problem. Reduction of avoidable hospital admissions is key to improving quality of life of patients and effectively managing expensive hospital resources. As reducing avoidable hospitalisations is one of the goals of PHN activities, targeting risk determination is a priority for the PHNs involved in the predictive tool pilot.

Numerous predictive algorithms and models have been developed overseas (mostly using linear regression models) with the aim of identifying risk of emergency presentation, admission and re‐admission among patients.3,4,5,6 These tools have had varying success but have often struggled to show good sensitivity (recall) over broader groups. In addition, they generally do not offer effective information to inform GPs during their consultations with patients.

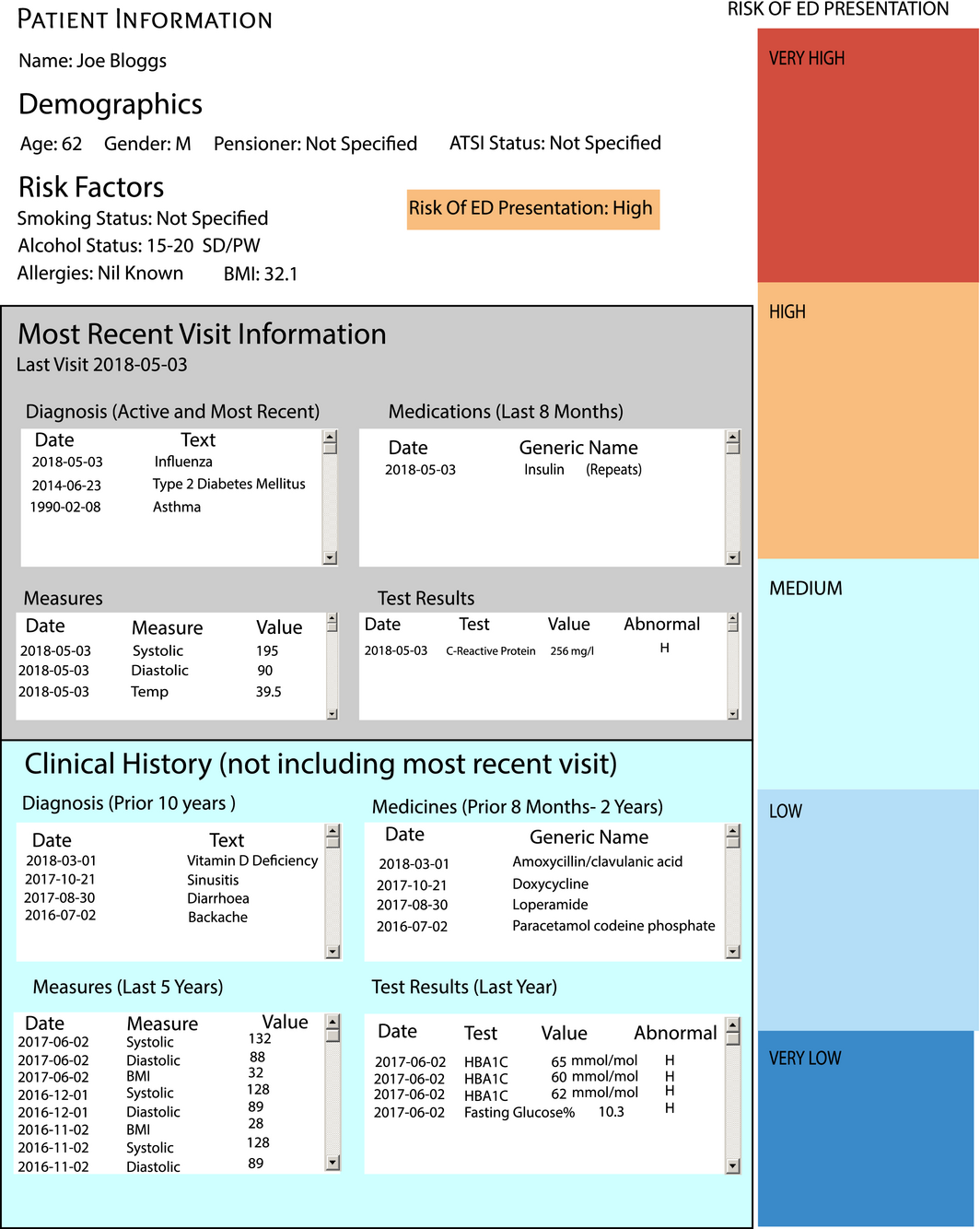

The POLAR Diversion Plan developed an automated decision support tool (DST) for ED admission risk based on general practice clinical and billing data.7 The DST was designed to calculate patient alerts at the point of consultation, using general practice data alone. A key strength of this approach is clear: the alert can be delivered automatically to the GP while the patient is sitting with them, which enables immediate intervention that may reduce risk. An example is shown in Box 1.

This project used machine learning, which is a subset of techniques termed artificial intelligence (advanced computer techniques for processing information). Machine learning has many variations, but they all share an important difference from traditional statistical methods — the ability to make accurate predictions on unseen data.8 Machine learning is also characterised by the ability to continue learning with new information. To optimise the prediction accuracy, often the methods do not attempt to produce interpretable models, which enables machine learning to handle the large numbers of variables that most big datasets have.9,10

By using linked records of all patients admitted to an ED over 5 years, we were able to map the general practice journeys of all those patients. Data from 17 067 GP visits for 8479 unique patients (excluding injury‐based presentations) were used. These data were fed into the machine learning program, and 157 330 individual attribute values were used to train the model.

The output was a DST that can be run on the data in a GP's home system and can give a risk profile at the time of consultation. When validated with data that had been withheld (to test the model), the tool had a precision/positive predictive value (PPV) of 74% and recall/sensitivity of 68% for patients who were admitted within 30 days (Box 2). It predicted the risk of a patient attending an ED in the next 30 days with accuracy equivalent to or greater than that of previously published work: the accuracy of POLAR at 0–30 days is comparable with that of QAdmissions at 1 year (73% PPV, 70% recall/sensitivity) and PEONY (Predicting Emergency Admissions Over the Next Year) at 1 year (67% PPV, 4% recall/sensitivity).3,5
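To make the two reported measures concrete, the following minimal sketch computes PPV and sensitivity from confusion-matrix counts. The counts are hypothetical, chosen only so that the results round to 74% and 68%; they are not the study data, and the function names are illustrative.

```python
def ppv(true_pos: int, false_pos: int) -> float:
    """Precision / positive predictive value.

    Of the patients the tool flagged as at risk, the share who did attend an ED.
    """
    return true_pos / (true_pos + false_pos)


def sensitivity(true_pos: int, false_neg: int) -> float:
    """Recall / sensitivity.

    Of the patients who did attend an ED, the share the tool had flagged.
    """
    return true_pos / (true_pos + false_neg)


# Hypothetical counts for illustration only (not taken from the POLAR study).
TRUE_POS = 629   # flagged, and attended an ED within 30 days
FALSE_POS = 221  # flagged, but did not attend
FALSE_NEG = 296  # not flagged, but attended

if __name__ == "__main__":
    print(f"PPV: {ppv(TRUE_POS, FALSE_POS):.0%}")
    print(f"Sensitivity: {sensitivity(TRUE_POS, FALSE_NEG):.0%}")
```

The two measures answer different questions. PPV tells the GP how often an alert is right, whereas sensitivity tells the health system how many at-risk patients the tool finds.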

We converted the raw risk scores generated by the program into three categories (very high risk, high risk or low risk of ED admission) for use at the desktop. Given its accuracy, the PHNs involved in the project deployed the tool to practices during the latter half of 2018. This makes it the largest real‐world deployment of an artificial intelligence tool for primary health care in Australia, and one of the largest in the world.
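The conversion of a raw score into a desktop category can be sketched as a simple thresholding step. This is an illustration only: the cut-off values below are hypothetical, the thresholds actually used are not reported here, and the sketch assumes the raw score is a probability between 0 and 1.

```python
# Hypothetical cut-offs for illustration; the thresholds used in practice
# are not stated in this article.
HIGH_CUTOFF = 0.5
VERY_HIGH_CUTOFF = 0.8


def risk_category(score: float) -> str:
    """Map a raw risk score (assumed to lie in [0, 1]) to one of three categories."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be between 0 and 1, got {score}")
    if score >= VERY_HIGH_CUTOFF:
        return "very high risk"
    if score >= HIGH_CUTOFF:
        return "high risk"
    return "low risk"


if __name__ == "__main__":
    for example_score in (0.12, 0.55, 0.93):
        print(example_score, "->", risk_category(example_score))
```

Where the cut-offs sit is a clinical choice as much as a statistical one: lowering a threshold flags more patients who will attend an ED, at the cost of more alerts for patients who will not.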

This type of tool raises two issues: how interactions with data will redefine a meaningful health care system, and the role of computing analytics in the traditionally dyadic doctor–patient relationship.11 In the past, all data about patients could be contained either in their doctor's head or on small pieces of paper. Now patients generate vast amounts of data contained in a digital environment. The “physical patient” must be combined with the “data patient” to become the “patient interactive”.12

Medicine was once the province of tradition and common practice. The introduction of the scientific method created the first systematic way of using data to improve patient care. In the middle of the past century, the development of medical colleges started to further systematise the quality aspects of care. However, as the amount of data has increased, our ability (as humans) to process it has diminished. So increasingly we are looking to computing power to help us.

Our view is that the next wave of health care revolution is going to involve artificial intelligence, precision medicine (targeted care using genomic data) and other concepts that have not yet been conceived. Much of the commentary in the clinical press expresses fear, uncertainty and doubt: radiologists will become redundant, robots will replace clinicians, and so on. The reality is much more prosaic and can be positive rather than negative. But the clinical community must come to terms with this new wave, in the same way as it had to come to terms with previous revolutions. Clinicians and artificial intelligence can exist in harmony, providing support to the growing health care sector and better outcomes for patients.

The artificial intelligence DST that we describe in this article, and the many more that will follow, mean that issues relating to artificial intelligence DSTs are no longer theoretical. These are some of the lessons we learned and views we gained while developing the DST and considering how these types of programs will be used in the future.

- DSTs provide decision support, not decision replacement. While some areas might be more prone to human replacement (radiology is a commonly raised area), DSTs support clinicians at the time of interaction and are deliberately framed to provide risk ratings – it is still up to the doctor–patient dyad to decide what to do. What this might entail should be the subject of post‐implementation study. Individual social circumstance, or subtler medical circumstance, cannot be known by “the machine”, and the relationship should always remain the authority, with the DST assisting the doctor–patient dyad to make a decision. We no longer talk of the algorithm but of the DST.

- Regulation for DSTs is currently absent.13 In deciding to deploy our DST, there was no regulation or guideline to assist us, unlike if we were recommending a new treatment or procedure. Our own governance and clinical risk assessment framed the risk as low, as the recommendation is to the clinician, who must then decide using their usual skills on what to do (or not do).

- Robust ethical considerations are absent, although there has been discussion of computers as “moral actors”.14 Again, by providing advice to the clinician, DSTs are dependent on existing ethical frameworks and rely on doctor–patient relationships. However, this will not last without due consideration of a new ethical framework.

- From a medico‐legal viewpoint, we need to consider who will be responsible for what. What are the implications of a clinician ignoring a DST recommendation? Or following the recommendation and the patient experiencing a bad outcome? And if the DST is wrong, is it the program or the programmer that is held responsible? And is it now a medico‐legal requirement to provide good data?

- Existence of good quality data is vital to DST success.15 But good quality data cannot be mandated; they must be driven by providing clinical utility. Our view is that good quality data are now an essential part of good medical practice, and clinicians who do not create good quality data are not providing good quality care, for a range of reasons, including denying patients access to tools such as DSTs.

- Clinicians need to change their viewpoint of the clinical process. Using DSTs is a new clinical method. Some DSTs will be better than humans at what they do. This does not mean that doctors are missing vital information; it simply means that computing power can now process data and make connections in ways that humans cannot. By the same token, computers lack intuition and the capacity for emotional connections.16 The clinical interaction of the future will acknowledge the strengths of each party (computer, clinician and patient).

Famously, Facebook started with the motto “move fast and break things” — an approach which is the complete antithesis of clinical medicine, which advocates a cautious, tested approach. Neither will be appropriate in the future, as the potential power of computing is applied in earnest. DSTs driven by artificial intelligence are the beginning. The clinical community needs a better understanding of, and opportunity to engage with, these developments. The next wave of innovation in medicine is here. We should invite it in, rather than resist it.

Provenance: Commissioned; externally peer reviewed.

- 1. Artificial intelligence in healthcare: within touching distance [editorial]. Lancet 2017; 390: 2739.

- 2. Mazza D, Pearce C, Turner LR, et al. The Melbourne East Monash General Practice Database (MAGNET): using data from computerised medical records to create a platform for primary care and health services research. J Innov Health Inform 2016; 23: 181.

- 3. Hippisley‐Cox J, Coupland C. Predicting risk of emergency admission to hospital using primary care data: derivation and validation of QAdmissions score. BMJ Open 2013; 3: e003482.

- 4. Billings J, Georghiou T, Blunt I, Bardsley M. Choosing a model to predict hospital admission: an observational study of new variants of predictive models for case finding. BMJ Open 2013; 3: e003352.

- 5. Donnan PT, Dorward DW, Mutch B, Morris AD. Development and validation of a model for predicting emergency admissions over the next year (PEONY): a UK historical cohort study. Arch Intern Med 2008; 168: 1416–1422.

- 6. Wallace E, Stuart E, Vaughan N, et al. Risk prediction models to predict emergency hospital admission in community‐dwelling adults: a systematic review. Med Care 2014; 52: 751–765.

- 7. Pearce C, McLeod A, Rinehart N, et al. POLAR Diversion: using general practice data to calculate risk of emergency department presentation at the time of consultation. Appl Clin Inform 2019; 10: 151–157.

- 8. Beam AL, Kohane IS. Big data and machine learning in healthcare. JAMA 2018; 319: 1317–1318.

- 9. Bini SA. Artificial intelligence, machine learning, deep learning, and cognitive computing: what do these terms mean and how will they impact health care? J Arthroplasty 2018; 33: 2358–2361.

- 10. Mehta N, Devarakonda MV. Machine learning, natural language programming, and electronic health records: the next step in the artificial intelligence journey? J Allergy Clin Immunol 2018; 141: 2019–2021.e1.

- 11. Scott D, Purves I. Triadic relationship between doctor, computer and patient. Interacting Comput 1996; 8: 347–363.

- 12. Robinson JD. Getting down to business – talk, gaze, and body orientation during openings of doctor–patient consultations. Hum Commun Res 1998; 25: 97–123.

- 13. Coiera EW, Westbrook JI. Should clinical software be regulated [editorial]? Med J Aust 2006; 184: 601–602. https://www.mja.com.au/journal/2006/184/12/should-clinical-software-be-regulated

- 14. Arnold M, Pearce CM. Are technologies innocent? Part one. Technol Society Mag 2015; 34: 100–101.

- 15. Andreu‐Perez J, Poon CC, Merrifield RD, et al. Big data for health. IEEE J Biomed Health Inform 2015; 19: 1193–1208.

- 16. Dreyfus HL, Dreyfus SE, Athanasiou T. Mind over machine: the power of human intuition and expertise in the era of the computer. New York: Free Press, 1986.

The DST research described in this article was undertaken with the generous assistance of the HCF Research Foundation.

Christopher Pearce is Chair of the Australian Digital Health Agency (ADHA) My Health Record Improvement Group. Elizabeth Deveny is a member of the ADHA board.