Simulation-based education (SBE) is a rapidly developing discipline that can provide safe and effective learning environments for students.1 Clinical situations for teaching and learning purposes are created using mannequins, part-task trainers, simulated patients or computer-generated simulations.

Changes in health care delivery and medical education in Australia have presented medical schools with considerable challenges in providing suitable clinical experiences in medical curricula. A near doubling of student numbers, combined with pressure to increase tertiary hospital productivity, has reduced students’ access to hospital patients. The tertiary hospital clinical experience thus no longer meets the needs of the curriculum. In this context, simulated learning environments can contribute to and expand students’ opportunities for gaining clinical skills and experience.1,2

An important influence on the use of SBE is the patient safety agenda. Adverse events and resultant patient harm are often attributed to failures in communication and teamwork. Practice in simulated learning environments can reduce some of the underlying causes of adverse events. The Lucian Leape Institute, in its report Unmet needs: teaching physicians to provide safe patient care,3 recently urged medical schools to take advantage of the rapidly expanding uses of simulation to equip students with the skills required to protect patient safety.

SBE has the potential to provide greater efficiency and rigour compared with learning through opportunistic clinical experiences. Clinical situations and events can be scheduled, observed and then repeated so learning can be consolidated. SBE can also ensure that students have a degree of clinical competence before exposure to real patients. This has positive implications for both patient safety and training time. Furthermore, SBE can enhance the transfer of theoretical knowledge to the clinical context and ease the transition to the clinical years and into the workforce.2 Students can rehearse specific clinical challenges, such as the management of acute heart failure,4 and develop the skills needed for working in a team (Box 1). While experience with real patients will always be fundamental to developing clinical expertise, there are areas in each domain of medical practice in which instruction can be supported and enhanced by SBE.

Simulation of the clinical environment can be achieved in a variety of ways:

Full-body mannequins are physical representations of patients. Their complexity varies from being just the physical shape of a patient to incorporating complex electronic equipment for generating physiological responses. In the latter situation, responses can be controlled manually by an operator or programmed into the simulator to model the effects of pathological states and pharmacological interventions.

Part-task trainers are models used for repeated practice of the technical components of a clinical task. Examples include “arms” for practising intravenous cannulation, head and thorax models for practising airway skills and synthetic skin pads for practising suturing.

Simulated patients, also called “patient actors” or “standardised patients”, are individuals trained to behave in a particular way for clinical interactions.5 They are extensively used for teaching and assessment in medical education, especially for communication skills acquisition, and can provide constructive feedback to students from the patient perspective.6 Specifically trained simulated patients, known as clinical teaching associates, are used in some Australian medical schools to introduce students to sensitive clinical tasks, such as conducting a breast examination or performing a Pap smear.7

Computer-generated simulators are representations of tasks or environments used to facilitate learning. These may be as simple as a computer program to demonstrate the operation of a piece of equipment, such as an anaesthetic machine,8 or as complex as a detailed virtual reality environment in which participants interact with virtual patients or other health professionals (for example, Second Life — a 3-D virtual reality environment used to create a simulated learning experience).9,10

Hybrid simulators combine simulated patients with part-task trainers to contextualise learning. For example, a model wound can be attached to a simulated patient so students can learn both the procedural technique and the associated communication skills and professional behaviours.11 Similarly, a modified stethoscope placed on a patient actor can be used to simulate specific clinical signs, such as a heart murmur or abnormal breath sounds.12

“Fidelity” describes the extent to which a simulation represents reality. It also refers to the psychological effect of “immersion” in the situation, or “being there”, and the extent to which the clinical environment is accurately represented.13 The degree of realism of a simulation technique, and thus the choice of simulator device, needs to be carefully matched to the learner’s educational level, as too much realism and complexity can distract students, especially novices, from learning basic skills.14 For example, the steps for inserting a urinary catheter may be best learnt first using a part-task trainer on which each step can be practised. The realism can then be increased by attaching the model to a simulated patient and adding the requirement to use effective communication skills at the same time.

A major challenge with teaching and learning in clinical settings is that it is opportunistic and unstructured. This can be overwhelming for students who are often required to attempt tasks for which they are ill-prepared. SBE allows deconstruction of clinical skills into their component parts, so students can be presented with scenarios and tasks appropriate for their stage of learning, thus reducing the cognitive load.15 Without the complexities of dealing with real patients, students can focus on mastering basic skills and can more readily abstract principles from their experiences to apply in other settings.

A key advantage of SBE is the ability to create learning environments that facilitate deliberate practice.16 Students can rehearse their clinical skills within a structured framework in a focused and repetitive manner, thereby refining their skills until their performance becomes fluent and instinctive. Within the limitations of timetabling, practice can be distributed over multiple sessions of short duration, which promotes more meaningful learning than intense practice over fewer, but longer, study periods.17 Existing knowledge and skills can be built on incrementally, and the complexity of tasks calibrated to cater for different learning rates and styles.

The structured nature of SBE can enhance the quality of feedback to students, an important component of skills development and maintenance. It can also encourage reflective practice, whereby students learn to monitor their own performance.18

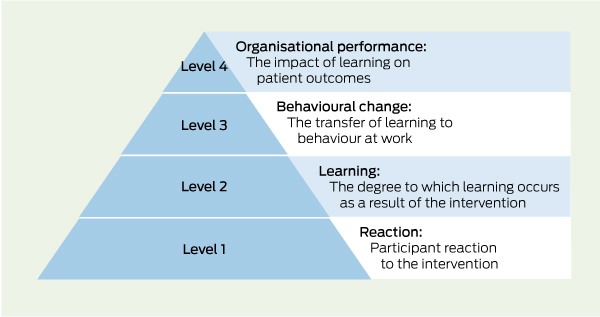

Research shows a positive relationship between SBE and learning outcomes. Applying Kirkpatrick’s framework for evaluating the impact of educational programs (Box 2),19 there is growing evidence that using simulation results in participant satisfaction, self-reported increased knowledge and improved performance.20

At present, however, there is little Kirkpatrick level 4 evidence on the impact of SBE on patient outcomes, although studies are now emerging.21-25 New approaches to synthesising data from qualitative and quantitative studies26 are also adding weight to the evidence reported in the research literature.

Overall, the evidence at Kirkpatrick levels 1–3 suggests SBE makes a valuable contribution to learning for students, trainees and clinicians, especially for clinical and procedural skills, clinical decision making, patient-centred and interprofessional communication, and teamwork. Some SBE modes, however, are better suited than others to developing certain types of knowledge, attitudes and skills. For example, using virtual patients has been found to help develop clinical reasoning skills, but can lead students to lose sight of patient-centred care.27

The evidence also shows that certain features and best practices need to be incorporated into SBE for it to achieve maximum impact. The twelve important features that optimise SBE are listed in Box 3 and explained in detail in the article by McGaghie et al.20

For maximal effectiveness, SBE needs to be integrated into the curriculum and not used as a stand-alone intervention. This is best achieved at the curriculum design phase, when educational methods are being identified to meet defined learning objectives. The degree to which SBE can be incorporated into a curriculum will depend on the availability of local resources.28 Strategies that promote the transfer of learning from SBE to clinical practice, and that should be considered when integrating SBE into medical curricula, are listed in Box 4.

Implementation of SBE needs a planned, thoughtful and coordinated approach. Currently, there is no cohesive strategy for simulation education and research in Australia, and successful programs depend to a large degree on local enthusiasts. The findings of the Health Workforce Australia (HWA) report on current and potential use of SBE in Australian medical education can be found on the HWA website (www.hwa.gov.au/sites/uploads/simulated-learning-environments-medical-curriculum-report-201108.pdf).

Moving teaching away from the bedside reveals the real costs of medical education, with the need for dedicated teaching spaces, equipment and trained teachers. HWA is supporting the use of simulated learning and is providing funding for SBE, including $46 million capital and $48 million recurrent funding for 2010–11, and $20 million recurrent funding per annum subsequently. This funding is for all health care professions and will significantly enhance SBE capability and capacity in Australia, but the challenge to maintain some national cohesion remains. HWA has undertaken a review of opportunities for SBE to enhance efficiency and effectiveness of training of health professionals, and established a mechanism for SBE initiatives to develop capacity across Australia.29 HWA has also embarked on the development of a training program for simulation instructors.

Most health care facilities will want or need some type of simulation facility. The real issues are which types of simulators are required and what staff are needed to run facilities appropriately. A “hub and spoke” model is likely to be the best solution, with major investment in communication technology to ensure effective interactions between facilities. Regional networks could be used to coordinate the development of learning programs, usage and research, but should not discourage local initiatives. Purchasing major infrastructure on a shared basis, and establishing regional or national contracts for servicing equipment, may allow some economies of scale. Regional centres or even a national institute for health simulation could coordinate standards for simulation training programs and staff accreditation.30

In the United States, the Society for Simulation in Healthcare has gone some way towards developing an approach to accreditation for simulation programs, which could serve as a useful model.31 Lessons for further research and development may also be gained from other industries such as defence, aviation and construction.

Health care is increasingly being delivered by teams, and the importance of interprofessional education in improving team collaboration is now recognised in medical school education. Newly graduated doctors and nurses describe major barriers in patient care caused by limited understanding of each other’s roles, lack of task coordination, and ineffective communication strategies.32

Opportunities to undertake teamwork training in real clinical environments are limited. Recent research in simulated settings has shown how information exchanges can be structured to improve communication.33 SBE allows both medical students and qualified doctors to learn and practise the skills of leadership, communication, task coordination and cooperation with other health professionals.

The past 10 years have seen a shift towards requiring practitioners to demonstrate competence in key skills — at medical school, vocational training and specialist training levels. Simulation is increasingly being used for these assessments,34 including at medical student level.35,36 It is likely that competency assessments will become more complex in the future; behavioural aspects and the ability to work in a team will be incorporated into assessments, rather than just technical skills or application of knowledge. There is emerging evidence of the reliability and validity of assessments using simulation for these aspects of medical education.37,38

Box 4: Strategies promoting transition from simulation-based education (SBE) to clinical practice

- Align SBE program goals with the needs of individual learners, and with other curriculum goals and activities

- Optimise the timing of SBE interventions to fit with learner needs

- Provide opportunities for repeated practice with feedback and structured learner reflection to broaden the application of SBE to clinical experiences

- Contextualise SBE for immersive simulations by recreating key elements of clinical settings or using simulation in situ

- Provide continuity between simulated and clinical learning environments

- Maintain close working relationships between clinicians, educators and simulation technicians during the development of SBE programs

Provenance: Commissioned; externally peer reviewed.

- Jennifer M Weller1

- Debra Nestel2

- Stuart D Marshall3

- Peter M Brooks4

- Jennifer J Conn5

- 1 Centre for Medical and Health Sciences Education, University of Auckland, Auckland, NZ.

- 2 Gippsland Medical School, Monash University, Churchill, VIC.

- 3 Simulation and Skills Centre, Southern Health and Monash University, Monash Medical Centre, Melbourne, VIC.

- 4 Australian Health Workforce Institute, University of Melbourne, Melbourne, VIC.

- 5 Department of Medicine, Royal Melbourne Hospital, University of Melbourne, Melbourne, VIC.

No relevant disclosures.

- 1. Ziv A, Wolpe P, Small S, Glick S. Simulation-based medical education: an ethical imperative. Acad Med 2003; 78: 783-788.

- 2. Weller J. Simulation in undergraduate medical education: bridging the gap between theory and practice. Med Educ 2004; 38: 32-38.

- 3. Lucian Leape Institute. Unmet needs: teaching physicians to provide safe patient care. Boston: National Patient Safety Foundation, 2010. http://www.npsf.org/wp-content/uploads/2011/10/LLI-Unmet-Needs-Report.pdf (accessed Apr 2012).

- 4. Kneebone RL. Practice, rehearsal, and performance: an approach for simulation-based surgical and procedure training. JAMA 2009; 302: 1336-1338.

- 5. Barrows H. Simulated patients in medical teaching. CMAJ 1968; 98: 674-676.

- 6. Kneebone R, Arora S, King D, et al. Distributed simulation – accessible immersive training. Med Teach 2010; 32: 65-70.

- 7. Robertson K, Hegarty K, O’Connor V, Gunn J. Women teaching women's health: issues in the establishment of a clinical teaching associate program for the well woman check. Women Health 2003; 37: 49-65. doi: 10.1300/J013v37n04_05.

- 8. Lampotang S, Dobbins W, Good ML, et al. Interactive, web-based educational simulation of an anesthesia machine. J Clin Monit Comput 2000; 16: 56-57.

- 9. Schmidt EA, Scerbo MW, Bliss JP, et al. Surgical skill performance under combat conditions in a virtual environment. Proceedings of the Human Factors and Ergonomics Society 50th Annual Meeting October 2006; 50: 2697-2701. http://pro.sagepub.com/content/50/26/2697.abstract (accessed Apr 2012).

- 10. Honey MLL, Diener S, Connor K, et al. Teaching in virtual space: Second Life simulation for haemorrhage management [interactive session]. Proceedings Ascilite Auckland 2009; 1222-1224. http://www.ascilite.org.au/conferences/auckland09/procs/honey-interactive-session.pdf (accessed Apr 2012).

- 11. Kneebone R, Kidd J, Nestel D, et al. An innovative model for teaching and learning clinical procedures. Med Educ 2002; 36: 628-634.

- 12. Sprick C, Reynolds KJ, Owen H. SimTools: a new paradigm in high fidelity simulation. Stud Health Technol Inform 2008; 132: 481-483.

- 13. Beaubien JM, Baker DP. The use of simulation for training teamwork skills in health care: how low can you go? Qual Saf Health Care 2004; 13 Suppl 1: i51-i56.

- 14. Maran N, Glavin R. Low- to high-fidelity simulation — a continuum of medical education? Med Educ 2003; 37 Suppl 1: 22-28.

- 15. van Merriënboer J, Sweller J. Cognitive load theory and complex learning: recent developments and future directions. Educ Psychol Rev 2005; 17: 147-177.

- 16. Ericsson KA. Deliberate practice and the acquisition and maintenance of expert performance in medicine and related domains. Acad Med 2004; 79 (10 Suppl): S70-S81.

- 17. Ericsson KA, Krampe RT, Tesch-Römer C. The role of deliberate practice in the acquisition of expert performance. Psychol Rev 1993; 100: 363-406.

- 18. Mamede S, Schmidt HG. The structure of reflective practice in medicine. Med Educ 2004; 38: 1302-1308.

- 19. Kirkpatrick DL. Evaluating training programs: the four levels. San Francisco: Berrett-Koehler, 1994.

- 20. McGaghie WC, Issenberg SB, Petrusa ER, Scalese RJ. A critical review of simulation-based medical education research, 2003-2009. Med Educ 2010; 44: 50-63.

- 21. Grantcharov T, Kristiansen V, Bendix J, et al. Randomized clinical trial of virtual reality simulation for laparoscopic skills training. Br J Surg 2004; 91: 146-150.

- 22. Chaer R, Derubertis B, Lin S, et al. Simulation improves resident performance in catheter-based intervention: results of a randomized, controlled study. Ann Surg 2006; 244: 343-352.

- 23. Banks E, Pardanani S, King M, et al. A surgical skills laboratory improves residents’ knowledge and performance of episiotomy repair. Am J Obstet Gynecol 2006; 195: 1463-1467.

- 24. Ahlberg G, Hultcrantz R, Jaramillo E, et al. Virtual reality colonoscopy simulation: a compulsory practice for the future colonoscopist? Endoscopy 2005; 37: 1198-1204.

- 25. Frengley R, Weller J, Torrie J, et al. Teaching crisis teamwork: immersive simulation versus case based discussion for intensive care teams. Simulation in Healthcare 2010; 5: 368.

- 26. Pope C, Mays N, Popay J. Synthesising qualitative and quantitative health evidence. A guide to methods. Part 2, Chapter 5. Mixed approaches to evidence synthesis. Berkshire: McGraw-Hill; Open University Press, 2007: 95.

- 27. Lepschy A. Communication training. In: Rickheit G, Strohner H, editors. Handbook of communication competence. Berlin: Mouton de Gruyter, 2008: 315-323.

- 28. Walsh K. Cost effectiveness in medical education. Abingdon, UK: Radcliffe, 2010.

- 29. Health Workforce Australia. Simulated learning environments [website]. http://www.hwa.gov.au/work-programs/clinical-training-reform/simulated-learning-environments-sles (accessed Apr 2012).

- 30. Cumin D, Weller JM, Henderson K, Merry AF. Standards for simulation in anaesthesia: creating confidence in our tools. Br J Anaesth 2010; 105: 45-51.

- 31. Society for Simulation in Healthcare. Council for Accreditation of Healthcare Simulation Programs. https://ssih.org/committees/accreditation (accessed Apr 2012).

- 32. Weller JM, Barrow M, Gasquoine S. Interprofessional collaboration among junior doctors and nurses in the hospital setting. Med Educ 2011; 45: 478-487.

- 33. Marshall S, Harrison J, Flanagan B. The teaching of a structured tool improves the clarity and content of interprofessional clinical communication. Qual Saf Health Care 2009; 18: 137-140.

- 34. Boulet J. Summative assessment in medicine: the promise of simulation for high-stakes evaluation. Acad Emerg Med 2008; 15: 1017-1024.

- 35. Boulet JR, Murray D, Kras J, et al. Reliability and validity of a simulation-based acute care skills assessment for medical students and residents. Anesthesiology 2003; 99: 1270-1280.

- 36. Weller J, Robinson B, Larsen P, Caldwell C. Simulation-based training to improve acute care skills in medical undergraduates. N Z Med J 2004; 117: U1119.

- 37. Weller J, Frengley R, Torrie J, et al. Evaluation of an instrument to measure teamwork in multidisciplinary critical care teams. BMJ Qual Saf 2011; 20: 216-222.

- 38. Weller JM, Robinson BJ, Jolly B, et al. Psychometric characteristics of simulation-based assessment in anaesthesia and accuracy of self-assessed scores. Anaesthesia 2005; 60: 245-250.

Abstract

Simulation-based education (SBE) is a rapidly developing method of supplementing and enhancing the clinical education of medical students.

Clinical situations are simulated for teaching and learning purposes, creating opportunities for deliberate practice of new skills without involving real patients.

Simulation takes many forms, from simple skills training models to computerised full-body mannequins, so that the needs of learners at each stage of their education can be targeted.

Emerging evidence supports the value of simulation as an educational technique; to be effective it needs to be integrated into the curriculum in a way that promotes transfer of the skills learnt to clinical practice.

Currently, SBE initiatives in Australia are fragmented and depend on local enthusiasts; Health Workforce Australia is driving initiatives to develop a more coordinated national approach to optimise the benefits of simulation.